Building on the advancements of the XGain project, integrating the Mobile Object Tracking Camera (MOTCAM) solution marks a significant step forward in Artificial Intelligence (AI)-powered object detection for 5G-enabled drone operations. Designed to overcome rural connectivity challenges, this innovation leverages cutting-edge computing and AI-based analytics to enhance precision agriculture, environmental monitoring, and security applications.

MOTCAM: AI-Powered Object Detection at the Edge

MOTCAM is an AI-based, modular, and scalable real-time object detection system. It was initially developed under the PAI-Mobility project and has been adapted for XGain. With the AI processing executed on a Jetson Orin device deployed at the edge of a private 5G network, MOTCAM identifies humans and vehicles directly from video feeds in real time.

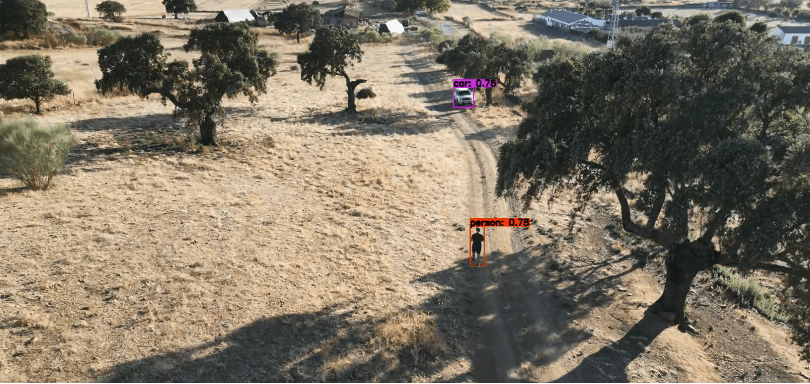

Fig. 1 Real-time object detection with MOTCAM, identifying humans and vehicles in a rural environment using edge AI processing.

Advanced Drone Hardware for Real-Time Object Detection

The XGain project leverages cutting-edge drone technology to enable real-time AI-powered object detection with MOTCAM. In the proposed solution, the drone captures video and streams it to the edge node, enabling uninterrupted real-time tracking.

From Lab to Field: Validating the Setup

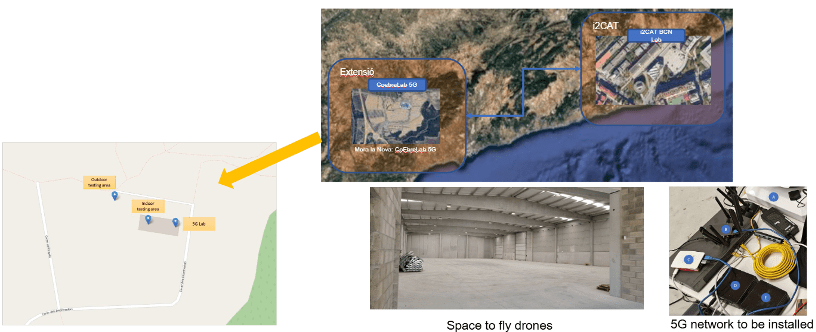

Before deploying MOTCAM in real-world scenarios, a series of controlled tests were conducted at the 5G Coebre Lab in Mora la Nova, Catalonia, as part of the use case implemented by i2CAT. The trials consisted of streaming video from the drone to the edge and were focused on evaluating key metrics such as network latency, throughput, and detection accuracy. The results showed good video quality with minimal CPU usage, as the system avoided re-encoding the video stream. While the initial tests revealed a slight delay (1–1.5 seconds) in video transmission, they provided crucial insights for optimizing the streaming pipeline. On the other hand, with deep neural networks running on Jetson Orin, the system achieved 16 FPS with GPU acceleration, ensuring high-speed object detection across various video feeds.

Fig. 2 MOTCAM validation for 5G-enabled drone operations at the 5G CoEbre Lab.
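To put the reported 16 FPS into perspective, the short Python sketch below shows one way a detection throughput figure could be derived from per-frame completion timestamps, and what time budget per frame it implies. The function names and the sample timestamps are illustrative assumptions and are not taken from the MOTCAM code or the trial data.

```python
def frames_per_second(completion_times):
    """Average FPS from frame completion times given in seconds.

    Assumption: timestamps are increasing and come from a single clock.
    """
    if len(completion_times) < 2:
        raise ValueError("at least two timestamps are needed")
    elapsed = completion_times[-1] - completion_times[0]
    if elapsed <= 0:
        raise ValueError("timestamps must be strictly increasing overall")
    # N timestamps delimit N - 1 frame intervals.
    return (len(completion_times) - 1) / elapsed


def frame_budget_ms(fps):
    """Time available to process one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


if __name__ == "__main__":
    # Hypothetical timestamps spaced 62.5 ms apart (not measured data).
    sample = [0.0, 0.0625, 0.125, 0.1875]
    print(frames_per_second(sample))
    print(frame_budget_ms(16))
```

At 16 FPS, each frame has a budget of 62.5 ms for inference and any pre- or post-processing on the edge device.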

Subsequent optimizations of both the video relaying on the Raspberry Pi and the edge processing reduced the end-to-end delay. Furthermore, by visualizing the post-processed stream directly on the Jetson Orin device, an overall end-to-end delay of around 315 ms between capturing the image and successful object detection was observed. This near real-time processing allows drone operators to react quickly to a positive detection.
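As an illustration of how such an end-to-end figure can be obtained, the following Python sketch computes the capture-to-detection delay per frame and summarizes it. It assumes that capture and detection timestamps are taken from the same or synchronized clocks. The `FrameRecord` structure and the sample values are hypothetical and do not reproduce the measurement setup used in the trials.

```python
from dataclasses import dataclass
from statistics import mean, median


@dataclass
class FrameRecord:
    frame_id: int
    captured_at: float  # seconds, when the camera captured the frame
    detected_at: float  # seconds, when detection on that frame finished


def end_to_end_delays_ms(records):
    """Capture-to-detection delay of each frame, in milliseconds."""
    return [(r.detected_at - r.captured_at) * 1000.0 for r in records]


def summarize(delays_ms):
    """Basic statistics over a non-empty list of delays."""
    if not delays_ms:
        raise ValueError("no delays to summarize")
    return {
        "mean_ms": mean(delays_ms),
        "median_ms": median(delays_ms),
        "max_ms": max(delays_ms),
    }


if __name__ == "__main__":
    # Hypothetical records for illustration only (not trial data).
    records = [
        FrameRecord(frame_id=0, captured_at=0.0, detected_at=0.25),
        FrameRecord(frame_id=1, captured_at=0.5, detected_at=1.0),
    ]
    print(summarize(end_to_end_delays_ms(records)))
```

Reporting the median and maximum alongside the mean helps distinguish a consistently low delay from one that is low on average but has occasional spikes.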

Next Steps: Live Demonstration in 2025

A full-scale demonstration is scheduled for April 2025, showcasing MOTCAM’s ability to detect lost or injured individuals in real time. With Persualdrone overseeing flight operations and i2CAT managing network integration, this event will highlight the potential of AI-powered, 5G-enabled drone analytics. This milestone is intended to establish a scalable model for rural drone centers, demonstrating how 5G-connected drones using AI can help bridge the digital divide and enhance connectivity in remote areas.