The i2CAT Foundation kicks off PRESENCE (A toolset for hyper-realistic and XR-based human-human and human-machine interactions), a European project aimed at providing intuitive and hyper-realistic Extended Reality (XR) experiences by bringing real humans into interactive virtual worlds. This three-year research project officially started on the 1st of January 2024 and is funded with more than 7 million euros by the European Commission within the Horizon Europe funding program. The project brings together 17 European partners, including research centres, universities and technology companies.

Within the project, researchers will try to reach the next level of realism for XR devices and applications. Taking a human-centred approach, they will deliver a toolset of technologies, such as holoportation, haptics and virtual humans, to enhance end-users’ feeling of presence in virtual scenarios. All the solutions will be developed with attention to their ethical implications and to end-users’ safety and privacy.

One of the key aspects of PRESENCE is to digitalize and transfer humans into shared virtual worlds, simultaneously and with multiple people remotely connected, offering a more intuitive and realistic experience. For that, researchers will develop a photorealistic multiuser holoportation system for real-time communication scenarios. The system requires improving the current volumetric video compression technologies for live communications. The second key aspect of the project is to enrich XR experiences at a multisensory level by exploring novel haptic technologies, enabling remote interactions among users and between users and objects. Toward this goal, researchers will develop novel tactile tracking and capturing systems to interpret properties such as form, texture or velocity. Finally, PRESENCE aims at generating realistic 3D humanoid models for intelligent virtual agents and embodied smart avatars. The latter, in particular, will be used to realistically represent humans in the virtual world. Such models require AI-powered intelligent virtual humans (e.g., via human speech, facial expression, gaze and body language), allowing natural appearances and physically realistic behaviours in the virtual world.

“The next level of human-to-human and human-machine interaction in virtual worlds will be reached in a way never witnessed before. Thanks to hyper-realistic holograms and avatars, remotely connected people will be able to see and hear each other in an immersive way and to feel each other, thanks to the integration of haptics, thus providing a strong illusion of co-presence”, explains Gianluca Cernigliaro, VR/AR technical director at i2CAT and the project’s coordinator.

©Vection Technologies. Reproduction prohibited without authorization

XR applied to professional and social scenarios

The technologies developed within PRESENCE will be validated in realistic scenarios, targeting XR applications for professional and social settings and addressing four use cases: professional collaboration, manufacturing, health, and cultural heritage.

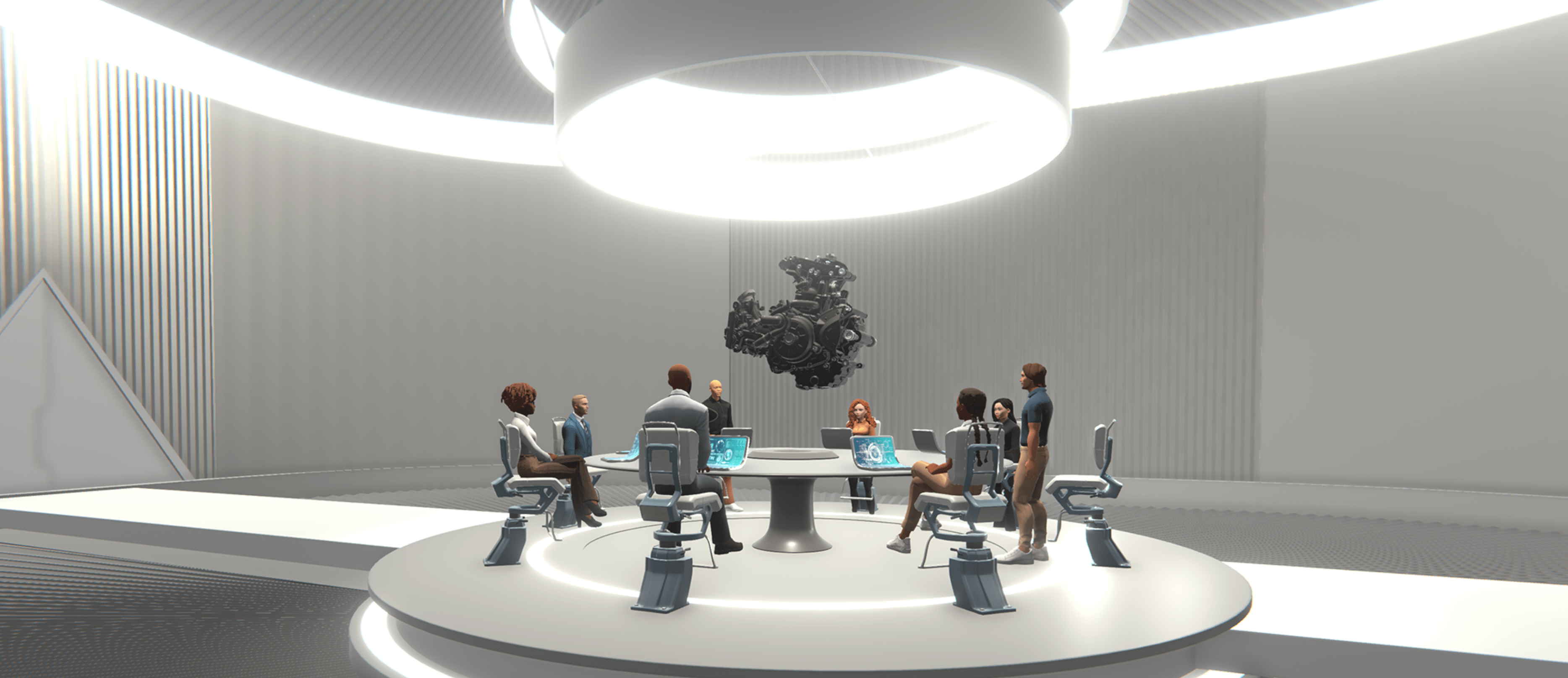

Within the professional collaboration use case, workers will meet, either holoported or as remotely controlled avatars, in immersive meeting rooms equipped with virtual collaborative tools such as boards, interactive models and document-handling gadgets. Researchers will also integrate haptic technologies to enhance remote collaboration, allowing multisensory interaction and improving tactile sensations, such as touching different materials.

The manufacturing use case is motivated by the poor health and safety outcomes of training in some industries that rely on heavy machinery, such as hydraulic presses or metal shearing machines. XR training scenarios allow for the simulation of real danger and enable personalized learning outcomes. Researchers will use tactile technologies to reproduce crucial manoeuvres involving force, weight, and pressure. The instructor will be reproduced with photorealistic quality thanks to a holoportation system, and the other participants will be represented as 3D avatars in a virtual manufacturing scenario.

The health use case will try to bring the assistance of a physiotherapist into an XR scenario, making it more accessible to patients. Physiotherapists will be able to engage with patients through a multiuser, real-time holoportation pipeline in which users without volumetric capturing systems will be represented as 3D avatars capable of reproducing their movements thanks to physics-based animations. Users will also be able to use haptic gloves to engage in much more finely tuned exercises.

Finally, the cultural heritage use case will enable users to experience sights remotely with a realistic sense of being there. Tourists and tour guides will be able to virtually travel together through Europe’s most important cultural sights and meet local historical characters. For that, researchers will develop virtual 3D reconstructions of relevant sights, and users will be represented as holograms or realistic 3D avatars that can faithfully reproduce their movements and interact with the tour guide, who will be seamlessly projected using the real-time holoportation pipeline.